A few weeks ago I was testing a new carbon dioxide monitor, the AIRVALENT CO2 Monitor, and I was interested to find a few ‘quirks’ with the measurements provided by it in comparison to my trusty Aranet4 Home monitors. Being someone who loves learning and experimenting, I decided to have a bit of a deeper look into how the AIRVALENT monitor’s data differed from my other monitors.

If you’ve ever looked to purchase or have purchased a CO2 monitor, you will likely have already heard the advice ‘only buy a monitor if it has an NDIR sensor’. NDIR stands for non-dispersive infrared, and these sensors are currently the gold standard for accuracy on consumer-grade monitors. The most popular NDIR sensors come from Sensirion and SenseAir, and you may have even heard of some popular models such as the SCD30, S8, or Sunrise sensor.

However, another type of sensor is growing in popularity: photoacoustic sensors. These sensors are often chosen over transmissive NDIR sensors for several reasons, but they also have some downsides. Here is an explanation from my article:

Most traditional NDIR sensors in devices such as the Aranet4 Home and INKBIRD IAM-T1 are transmissive NDIR sensors. These sensors work by firing infrared light across a chamber towards an optical detector. Since carbon dioxide absorbs this light, the detector measures the amount of light energy that reaches the other end of the chamber. As the carbon dioxide concentration increases, the light reaching the detector decreases, and the sensor can then calculate the carbon dioxide concentration from that drop.

While this method is accurate, it also means the sensors have to be large, as there is a minimum required distance between the emitter and detector in order to get accurate results. For example, one common NDIR sensor, the SenseAir S8, has the following dimensions: 8.5 x 33.5 x 20 mm. While this might seem relatively small, it means devices housing this sensor have to be able to house the sensor alongside all the other required components. When coupled with a battery, screen, mainboard, and other components, carbon dioxide monitors quickly turn from small products to thick and relatively large devices.
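To make the path-length point a little more concrete, here is a minimal Python sketch of the Beer-Lambert relationship that transmissive sensors rely on. The absorption coefficient `K_ASSUMED` and the two path lengths are made-up illustrative values, not figures from any datasheet, and real sensors apply far more calibration and compensation than this.

```python
import math

# Hypothetical lumped absorption coefficient, in 1/(ppm*cm).
# Illustrative only; it is not taken from any sensor datasheet.
K_ASSUMED = 1e-5

def transmitted_fraction(co2_ppm, path_cm, k=K_ASSUMED):
    """Fraction of emitted light reaching the detector (Beer-Lambert law)."""
    return math.exp(-k * co2_ppm * path_cm)

def estimate_ppm(fraction, path_cm, k=K_ASSUMED):
    """Invert the Beer-Lambert law to recover concentration from the signal."""
    return -math.log(fraction) / (k * path_cm)

# Compare how much the detector signal changes between 400 and 2000 ppm
# for a short and a long optical path (both lengths are arbitrary examples).
for path_cm in (0.5, 3.0):
    change = transmitted_fraction(400, path_cm) - transmitted_fraction(2000, path_cm)
    print(f"path {path_cm} cm: signal changes by {change:.4f}")
```

With a shorter path, the same change in CO2 produces a much smaller change in the detected light, which is why the emitter and detector can only be brought so close together before accuracy suffers.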

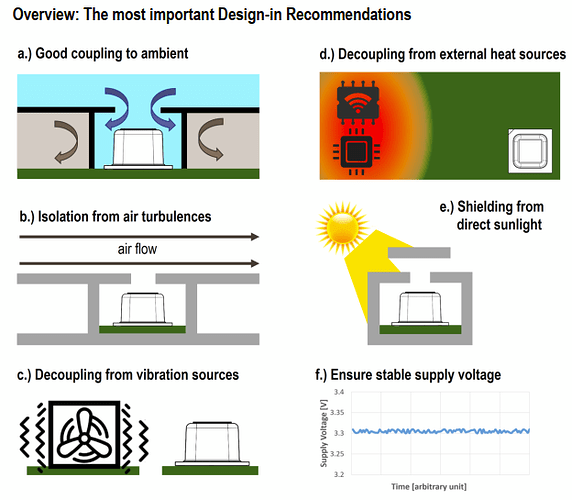

However, there is a way to decrease the size of the sensor: photoacoustic NDIR. The AIRVALENT CO2 Monitor uses a Sensirion SCD41, one of two models in Sensirion’s current photoacoustic range. Most interestingly, this sensor is tiny compared to typical transmissive NDIR sensors.

As you can see in the image above, the SCD41 (the little silver box with a white cover) is incredibly small! This allows carbon dioxide monitors to be made much smaller than if they used a transmissive NDIR sensor.

About how photoacoustic sensors work:

Photoacoustic NDIR sensors operate using a slightly more complex concept. First, they fire an infrared light into the chamber, which will be absorbed by the carbon dioxide molecules. This will cause the molecules to vibrate, which leads to a pressure change in the chamber. This pressure change will cause a sound, which can be picked up by a microphone inside the chamber. Since the placement of the microphone isn’t as important in a photoacoustic sensor (since sound is omnidirectional), and since there isn’t a minimum distance needed for measurements to be taken, these sensors can be much smaller than transmissive NDIR sensors.

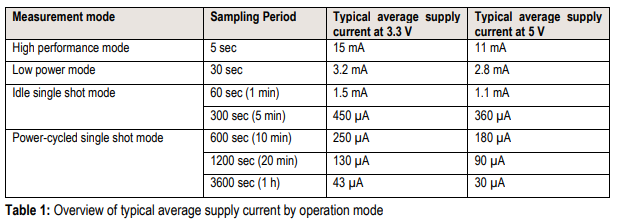

As you can see, the main reason for choosing a photoacoustic sensor is its size - they are tiny! In addition, there are other benefits, such as the price (photoacoustic sensors are often cheaper) and power consumption.

However, while I was testing the monitor’s accuracy against some Aranet4 Homes, I had some interesting results that I shared in the article. Having more time on my hands now, I wanted to dig further into the issue, so I made this thread. Firstly, a few notes:

- This is far from scientific. I am using my Aranet4 Homes as a baseline, as they use the SenseAir Sunrise, the most accurate sensor I can access.
- This isn’t an issue with the AIRVALENT monitor specifically; it’s common across all photoacoustic sensors I’ve tried.
- All monitors were calibrated in precisely the same conditions for every test below.
- I am not an expert on this matter, so if you have any thoughts to add, please go ahead and do so. It would be great to hear if others have similar findings!

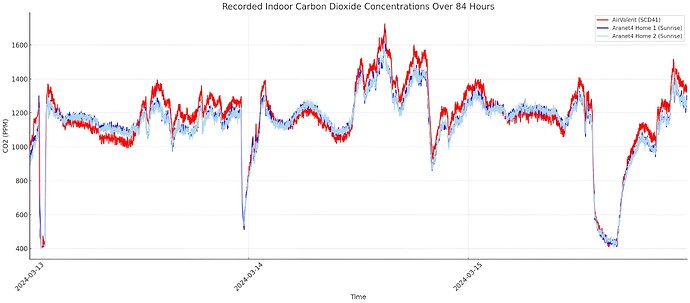

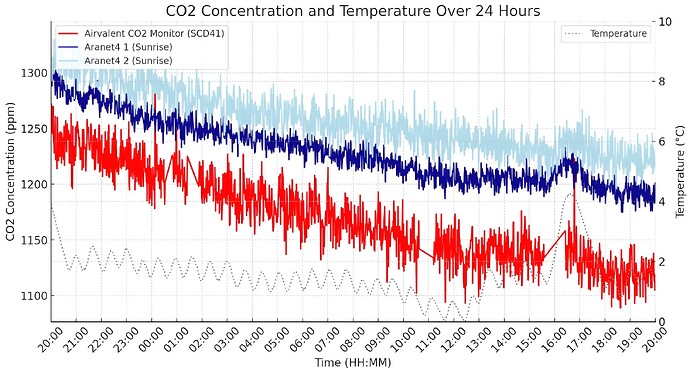

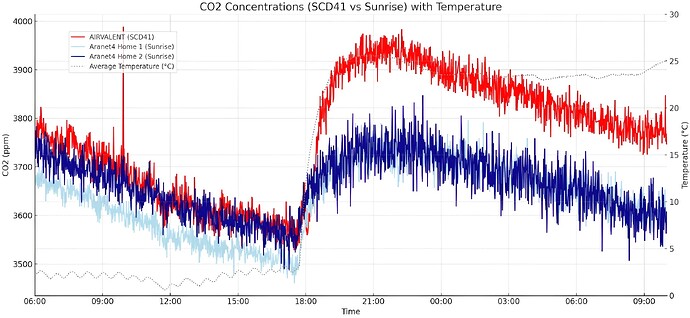

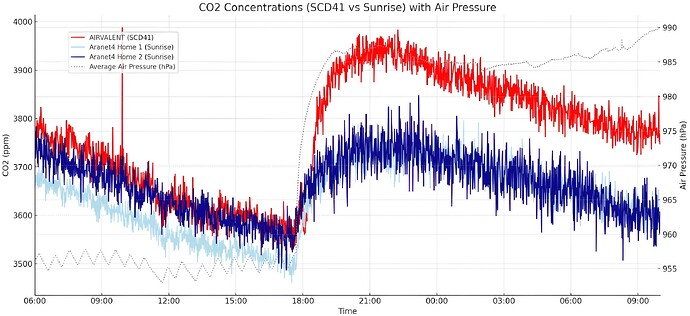

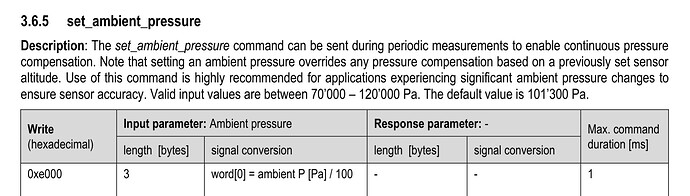

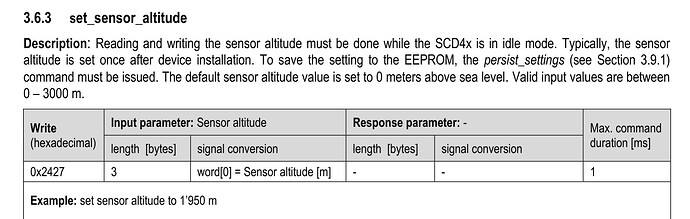

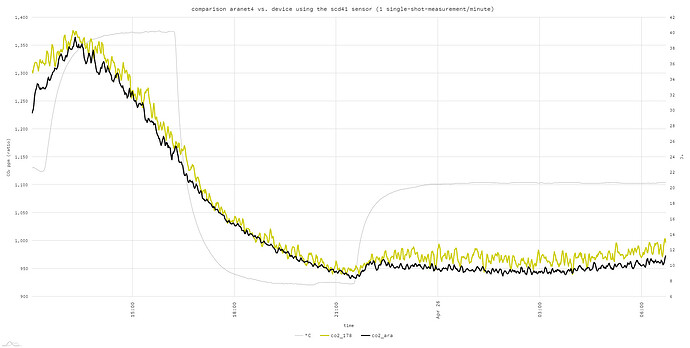

So, what are the ‘quirks’ I alluded to in the introduction? Well, firstly, I made the graph below, which shows the carbon dioxide concentrations as recorded by two Aranet4 Home monitors and my AIRVALENT monitor over about three days. When I first saw this graph, I wondered why the AIRVALENT with its SCD41 consistently read higher or lower than the Aranet4s. I guessed there might be another variable at play and went on to compare the temperature, relative humidity, and air pressure.

Above: without temperature.

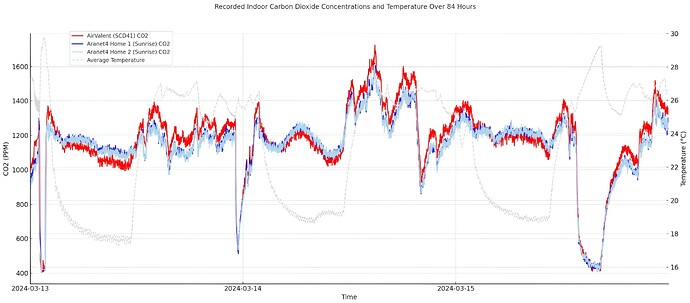

Below: with temperature.

As you can see, the SCD41 readings seem to be significantly impacted by temperature. The sensor consistently reads lower than the NDIR-equipped Aranet4 Homes at lower temperatures. At higher temperatures (above around 20 degrees Celsius), this swaps, and the SCD41 consistently reads higher - interesting!

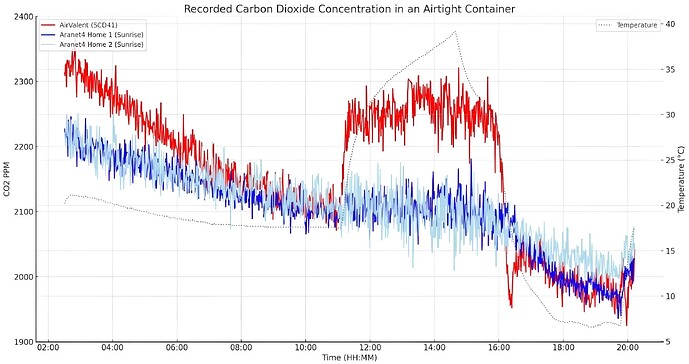

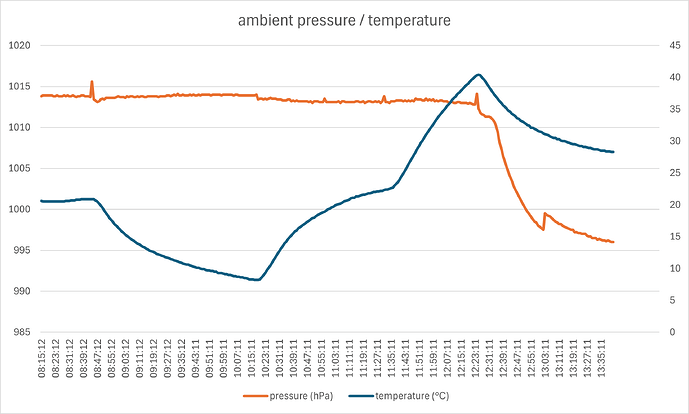
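For anyone wanting to run the same comparison on their own exports, here is a rough Python sketch of how the offset between the SCD41 and the reference monitors could be grouped by temperature. The row layout, the function name, and the 5-degree bin width are my own assumptions, and the example rows are placeholders to show the shape of the data, not measurements from my tests.

```python
from statistics import mean

def offsets_by_temperature(rows, bin_width=5):
    """Average (SCD41 minus mean of reference monitors) per temperature bin.

    Each row is (temperature in °C, SCD41 ppm, [reference ppm, ...]).
    Returns {bin start in °C: mean offset in ppm}, sorted by bin.
    """
    bins = {}
    for temp_c, scd41_ppm, reference_ppms in rows:
        offset = scd41_ppm - mean(reference_ppms)
        bin_start = int(temp_c // bin_width) * bin_width
        bins.setdefault(bin_start, []).append(offset)
    return {start: mean(values) for start, values in sorted(bins.items())}

# Placeholder rows, NOT real measurements.
example_rows = [
    (4.0, 950, [1000, 1010]),
    (6.0, 960, [1005, 1015]),
    (26.0, 1060, [1000, 1010]),
    (28.0, 1070, [1005, 1015]),
]

print(offsets_by_temperature(example_rows))
```

A negative value means the SCD41 read lower than the Aranet4 average in that temperature range, and a positive value means it read higher.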

These were room tests, meaning that the monitors were all located right next to one another but that the CO2 concentrations also changed frequently. To rule out CO2 concentration as a variable, I placed the three monitors in an airtight container. I placed the container in a cold, moderate, and warm environment. Below are the results.

Unfortunately, I don’t have a lab or anywhere to control the temperatures, so this test was quite rudimentary. For the room temperature part of the test, I put the container in my room, which was around 20 degrees. I then took the box outside (into the Bangkok sun), which caused the box’s interior to reach almost 40 degrees. Finally, I placed the box in my fridge and set it to 6 degrees. As you can see, while the readings from the Sunrise sensors in the Aranet4 Homes gradually decreased as expected (the box must not have been fully sealed), the SCD41 readings jumped around a lot - presumably because of how temperature impacts photoacoustic sensors.

I did some research, and this is a common issue with photoacoustic sensors as they suffer from signal loss at higher temperatures. While there are compensation algorithms that can be used (and likely are with the SCD41 since it also houses a temperature sensor), they aren’t fully accurate - at least not if the Sunrise/Aranet4 is to be believed.

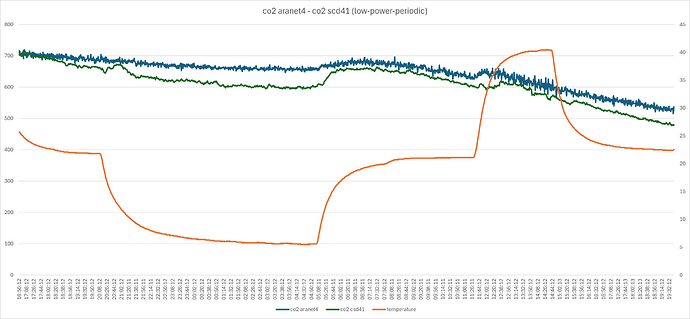

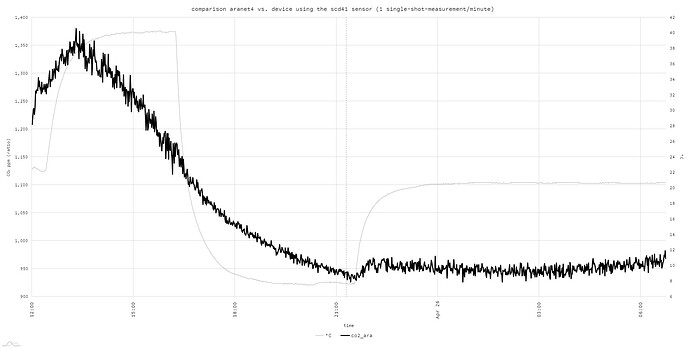

These graphs were all included in my full review of the AIRVALENT CO2 Monitor, but I wanted to dive deeper since I now have some more time to look into the matter. As such, I wanted to repeat the temperature tests but for longer periods of time to see if the results were consistent. Therefore, I took the three monitors out again the other day and decided to see how the SCD41 would perform. Again, I recalibrated all monitors in the same conditions and placed them back into the plastic container. Below are the results over 24 hours at room temperature (I’m in Vietnam, so 25-30 degrees Celsius is typical indoors).

As you can see, the SCD41 sensor again records higher than the two Aranet4 Homes. Since I’ve found that the SCD41 begins to read higher than the Sunrise sensors somewhere around 19-22 degrees, I was not surprised to see this result. As you will notice, some data is missing. I’m not sure why, but it is an issue with the AIRVALENT monitor/app and not the SCD41 sensor itself. While I was very frustrated to see this data was missing, there are still around 18 hours of data, which I believe is enough for a second room-temperature test.

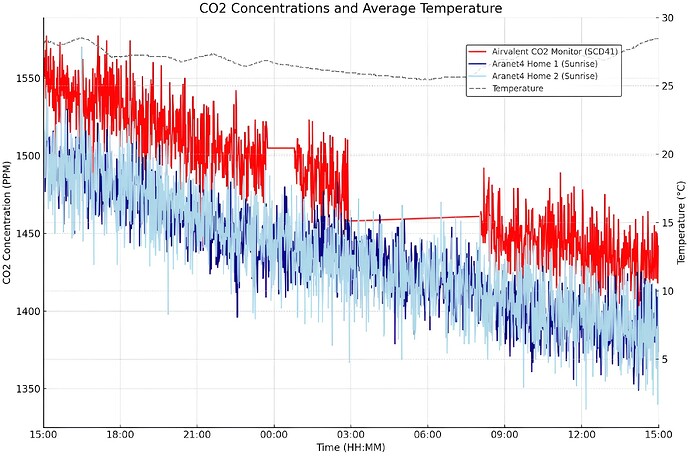

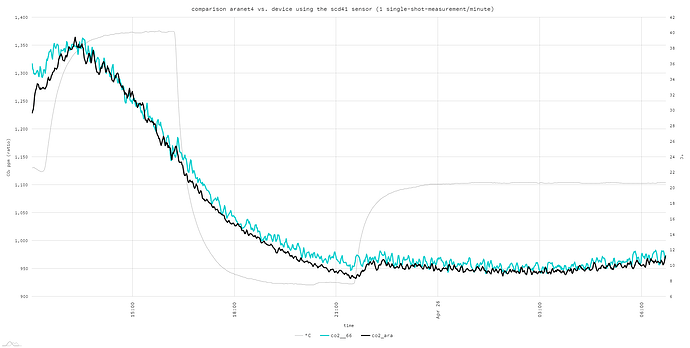

Of course, I also wanted to test the SCD41 at lower temperatures over 24 hours, which meant all three monitors had to go back to the fridge. Here are the results from that test:

As you can see, the temperature in this test was consistently between 0 and 4 degrees Celsius (the fluctuation is likely due to the fridge cooling activating at a certain threshold). Again, the SCD41 performed exactly as expected by reporting lower values than the Aranet4 Homes and their Sunrise sensors at cooler temperatures. In this graph, there is a larger difference between the two Sunrise sensors, but the SCD41 still reports significantly lower than both.

I would love to repeat this test again with the monitors in a hot environment, but unfortunately, I don’t know how I can maintain a high temperature for 12-24 hours. In Thailand, I placed the monitors on the balcony, and they nearly reached 40 degrees. I don’t have a balcony here in Vietnam, so I will need to find another way to maintain such a temperature for a decent length of time. Once I get a chance to perform this test, I expect to see the SCD41 report significantly higher once again!

Learning more about how these sensors perform and how environmental factors and other variables impact them has been fascinating. While this might seem like I’m saying you should avoid any monitor with the SCD41, this isn’t at all the case. Again, here are my own words from the full review:

So, what does this mean? Well, it might not mean much for you if you intend to use the device indoors. The device’s accuracy becomes relatively poor above around 30 degrees Celsius, and these are temperatures you’ll usually only experience outdoors or during summer. Indoors and in controlled environments, you likely won’t notice this issue much as the device is accurate. However, it’s essential to know about this issue so you can compensate for it. Unfortunately, nothing in technology seems purely beneficial, and the SCD41 makes a few compromises to be incredibly small.

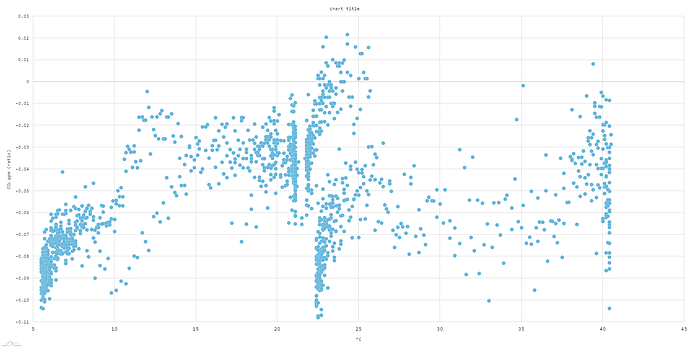

In conclusion, the sensor has some fantastic advantages, such as its tiny size and cost. However, you should be aware of the caveats with the SCD41 and other photoacoustic sensors so you know when they might not be entirely accurate. In my own testing, I found the SCD41 could read as much as 150 ppm higher than the Sunrise (at only 2000 ppm) at 35-40 degrees Celsius. While this is roughly in line with the SCD41’s stated accuracy (at 2000+ ppm, it is ±40 ppm ±5.0% MV, which works out to about ±140 ppm at 2000 ppm), I’ve found that real-life sensors tend to perform better than the stated accuracy in typical conditions. A great example of this is the Aranets in these tests, which were extremely consistent throughout.
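The stated-accuracy arithmetic can be sketched in a few lines of Python. This assumes the ±40 ppm ±5.0% MV figure quoted above is read as a fixed 40 ppm plus 5% of the measured value; the function name and default arguments are my own and don’t come from any Sensirion library.

```python
def stated_accuracy_band_ppm(reading_ppm, fixed_ppm=40.0, percent_of_reading=5.0):
    """Half-width of the stated accuracy band: fixed term plus a share of the reading.

    Defaults follow the 2000+ ppm figure quoted in this post (an assumption about
    how that figure is meant to be combined).
    """
    return fixed_ppm + reading_ppm * percent_of_reading / 100.0

# Band at a few readings in the 2000+ ppm range.
for reading in (2000, 3000, 5000):
    band = stated_accuracy_band_ppm(reading)
    print(f"{reading} ppm: about ±{band:.0f} ppm")
```

At a reading of 2000 ppm this gives a band of about ±140 ppm, so the 150 ppm gap I saw at 35-40 degrees sits right around the edge of the stated accuracy.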

Of course, you must also ask yourself if this difference even matters to you. In many of these tests, the difference between the SCD41 and Sunrise sensors was only 50 - 100 ppm. Would that difference actually matter to you in real life, or would you act the same if you saw your monitor reporting 1850 ppm compared to 1950 ppm? At the end of the day, I would take the same actions, trying to improve ventilation or masking up if needed. Even in the worst case, at around 35 - 40 degrees, the difference was only around 150 ppm.

There are a couple more SCD41 devices that I want to test.

Since I’ve already jumped into this rabbit hole, I will likely update this post soon. I would love to get a larger sample of sensors to test, and I am currently trying to find a way to gather data from two more SCD41 devices that I have. I would also like to test the SCD41 against the SenseAir S8, as I have four of these sensors sitting around (as opposed to only two Sunrise sensors). Right now, I feel like a lack of a larger sample size is causing me the most doubt about these results, and I will prioritise gathering more information. In the meantime, if you know anything about this issue, I would love it (and appreciate it!) if you could chime in with your experiences.